Paging David Guetta fans: this week we have an interview with the team that built the site behind his latest ad campaign. On the site, fans can record themselves singing along to his single, “This One’s For You,” and build an album cover to go with it.

Under the hood, the site is built on Lambda, API Gateway, and CloudFront. Social campaigns tend to be pretty spiky – when there’s a lot of press, a stampede of users can bring infrastructure to a crawl if you’re not ready for it. The team at parall.ax chose Lambda because there are no long-lived servers, and they could offload all the work of scaling their app up and down with demand to Amazon.

James Hall from parall.ax is going to tell us how they built, from nothing and in just six weeks, an internationalized app that can handle any level of demand.

The Interview

Parallax is a digital agency; we provide a range of services including application development, security and design services. We have 25 full-time employees as well as some external contractors. We specialise in providing massively scalable solutions, with a particular focus on sports, advertising, and FMCG (Fast Moving Consumer Goods).

The app is part of a huge marketing campaign for David Guetta’s new release “This One’s For You” – the official anthem of the UEFA EURO 2016 finals. We’re inviting fans – hopefully a million by March – into a virtual recording studio and giving them the chance to sing along with Guetta. Not only will some of their voices be included on the final song, we also create personalised album artwork with their name and favourite team. This can be shared among friends, increasing engagement and allowing more fans to become a part of the campaign. The whole site has been built in twelve languages, incorporating video content of the DJ himself and a chance to win a trip to Paris. You can check it out over at thisonesforyou.com.

Image credit: Parallax Agency Ltd.


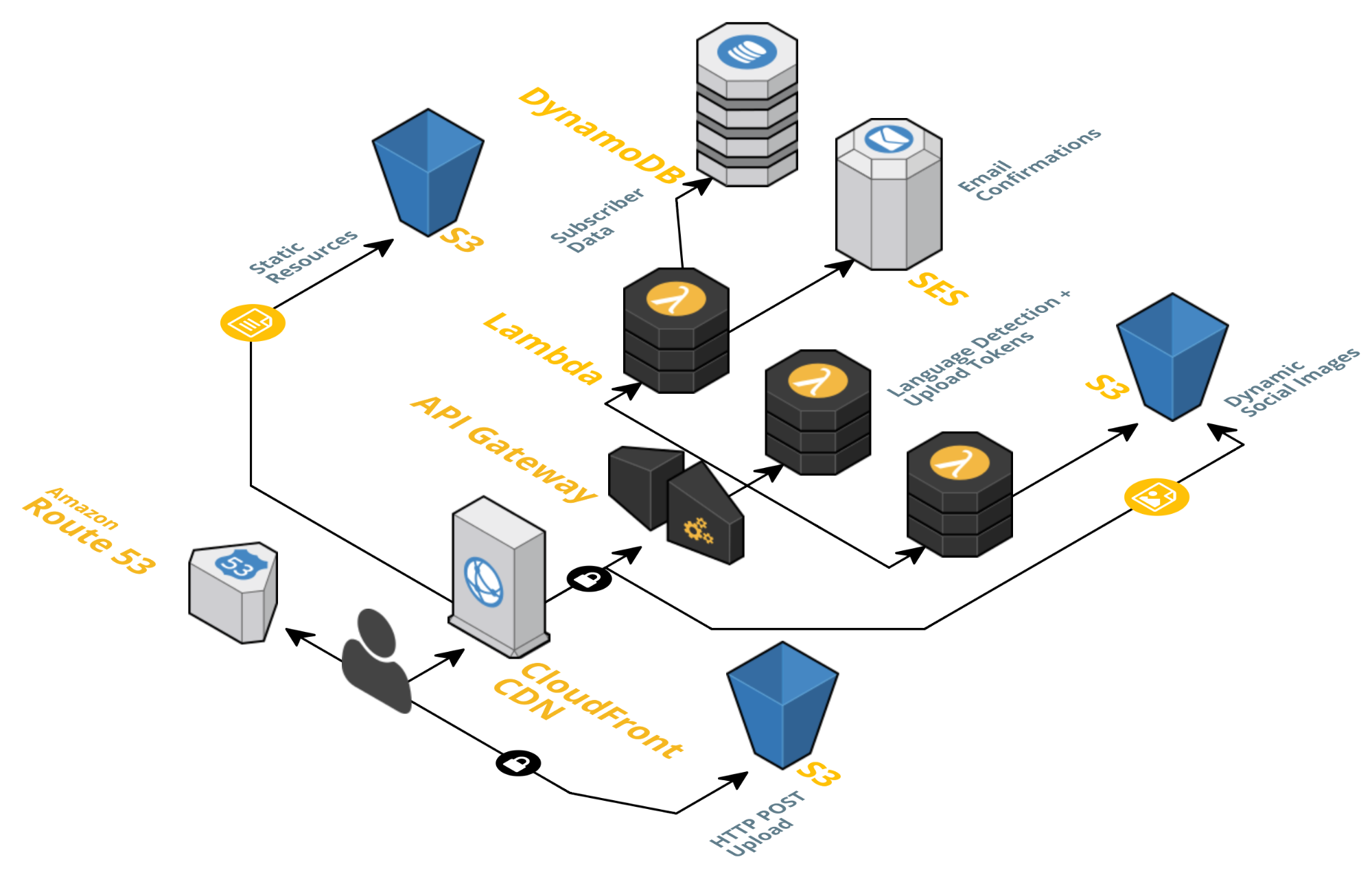

On the backend we use a variety of technologies to deliver a completely scalable architecture. We use Serverless (formerly JAWS) and CloudFormation to orchestrate the entire platform in code.

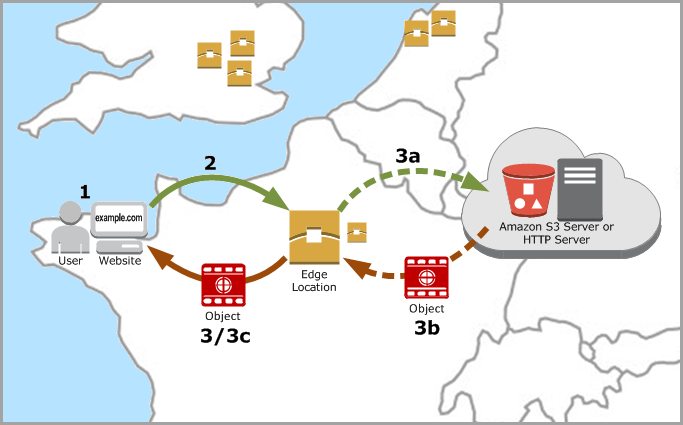

Requests are routed via CloudFront, with static assets cached at Edge locations nearest to the user requesting them. When the page first loads, everything is entirely static. A UUID is generated in the client browser; it is used for all future API requests and lets us associate different actions on the page without having to serve cookies. The randomness of this value is important, so the library uses timing and crypto functions (where available) to derive random seed data.
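To make the idea concrete, here is a minimal sketch of tagging requests with a random client ID instead of a cookie. The site does this in browser JavaScript with a library the interview does not name; this Python version only illustrates the pattern, and the `client_id` field name and both function names are assumptions made for the example.

```python
import uuid


def new_client_id() -> str:
    """Return a random (version 4) UUID as a string.

    uuid4() draws its bytes from the operating system's secure random source.
    """
    return str(uuid.uuid4())


def tag_request(payload: dict, client_id: str) -> dict:
    """Return a copy of the payload carrying the client ID.

    The "client_id" key is a hypothetical field name for this sketch.
    """
    return {**payload, "client_id": client_id}


if __name__ == "__main__":
    cid = new_client_id()
    # Both actions carry the same ID, so the backend can link them without cookies.
    print(tag_request({"action": "subscribe"}, cid))
    print(tag_request({"action": "request_upload_token"}, cid))
```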

Image credit: Amazon Web Services


The origin of the static assets is a simple S3 bucket. These are uploaded via a script on deployment.

A language detection endpoint, implemented as a basic Lambda function, then sends back the Accept-Language header along with the country code the request was received from. Another endpoint adds subscriber data to DynamoDB and sends the subscriber a welcome email via Amazon SES. To make the recording work, we have an endpoint that issues S3 tokens for a path named after the UUID provided. Uploads are then posted directly to S3 from the browser.
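A language detection endpoint along these lines could look roughly like the sketch below. It is not the Parallax code: the event shape, the response field names, and the use of the `CloudFront-Viewer-Country` header as the source of the country code are all assumptions made for illustration.

```python
def parse_accept_language(header: str) -> list[str]:
    """Return language tags ordered by descending q-value (ties keep header order)."""
    weighted = []
    for position, part in enumerate(header.split(",")):
        pieces = part.strip().split(";")
        tag = pieces[0].strip()
        if not tag:
            continue
        quality = 1.0  # a tag without an explicit q-value counts as q=1
        for param in pieces[1:]:
            name, _, value = param.strip().partition("=")
            if name.strip() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0  # treat a malformed q-value as lowest priority
        weighted.append((-quality, position, tag))
    return [tag for _, _, tag in sorted(weighted)]


def handler(event: dict, context: object = None) -> dict:
    """Echo the Accept-Language header and the viewer country (assumed event shape)."""
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    accept = headers.get("accept-language", "")
    return {
        "acceptLanguage": accept,
        "languages": parse_accept_language(accept),
        # Assumption: CloudFront is configured to forward this header.
        "country": headers.get("cloudfront-viewer-country"),
    }
```

The frontend could then pick the first entry in `languages` that matches one of the twelve supported translations and fall back to a default otherwise.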

The most useful application of Lambda was the image generation endpoint. We take an image of the user’s favourite team flag, overlay a picture of Guetta, then add their name. This is then uploaded to S3, along with a Facebook- and Twitter-sized graphic. We also upload a static HTML file, which points to this unique graphic. This ensures that when people share the URL, it displays their custom artwork. We use Open Graph image tags in the page for this.
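The static share page described above can be sketched as a small template function. This is an illustration rather than the site's actual markup: the function name, the example URLs, and the choice of extra tags beyond `og:image` are assumptions.

```python
from html import escape


def render_share_page(title: str, page_url: str, image_url: str) -> str:
    """Build a minimal static HTML page whose Open Graph tags point at one image."""
    # Escape every user-influenced value before placing it in an attribute.
    t, p, i = (escape(v, quote=True) for v in (title, page_url, image_url))
    return (
        "<!DOCTYPE html>\n"
        '<html>\n<head>\n<meta charset="utf-8">\n'
        f"<title>{t}</title>\n"
        f'<meta property="og:title" content="{t}">\n'
        f'<meta property="og:url" content="{p}">\n'
        f'<meta property="og:image" content="{i}">\n'
        '<meta name="twitter:card" content="summary_large_image">\n'
        f'<meta name="twitter:image" content="{i}">\n'
        "</head>\n<body></body>\n</html>\n"
    )


if __name__ == "__main__":
    # Hypothetical URLs; the real paths are named after the client's UUID.
    print(render_share_page(
        "My cover",
        "https://example.com/share/1234.html",
        "https://example.com/share/1234.png",
    ))
```

Because the page is a plain file on S3, social network crawlers can read the tags without any Lambda function being invoked.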

On the frontend, we used Brunch to compile the Handlebars templates, compile Sass, prefix the CSS, and run any other build tasks.

The recording interface itself has three implementations. The “A-grade” experience is the WebRTC recorder, which uses the new HTML5 functionality to record directly from your microphone. We then have a Flash fallback that offers the same experience on desktop browsers without WebRTC support. Finally, we have a file input that prompts users to record on mobile.

Yes, absolutely. Our regular stack is LAMP, built on top of CloudFront, Elastic Load Balancers and EC2 nodes. This would have scaled, but it would have been much more difficult to scale as quickly and as simply as it is using a Lambda-based architecture. We’d have had to build a queueing system for the image generation, then spin up EC2 nodes dedicated to making those images based on the throughput.

Writing a simple Lambda function and letting Amazon do all the hard work seemed like the obvious choice.

The team at Parallax consisted of five people. Tom Faller was the Account Manager, responsible for the day-to-day running of the project. I was the backend developer creating the Lambda functions and designing the cloud architecture. Amit Singh was primarily frontend, but worked on many of the glue parts between the interface and the backend JS. Chris Mills was QA and Systems, and linked us to the Serverless (formerly JAWS) project in the first place. Jamie Sefton was another JS developer and worked on integrating compatibility fallbacks into the codebase, including a Flash-based and an input-based recording experience for devices that don’t support WebRTC. I’d had experience with Lambda before, but it was new for the other team members.

I’d say around six or seven weeks in total, though there was plenty of research and prototyping done beforehand to arrive at the correct architecture design. For the amount of scalability required, it would have taken much longer to ensure it was just as robust using our “home turf”.

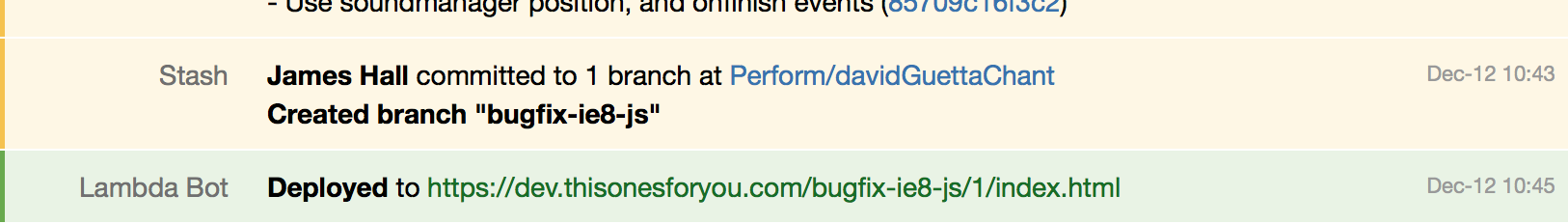

The application is deployed from Atlassian Bamboo. The codebase lives in Stash. Each and every branch gets its own deployment URL which is posted into our company chat once built. This lets us quickly test and decide if changes are mergeable.

Image credit: Parallax Agency Ltd.


For frontend JS errors we use Bugsnag; for this type of application this is the easiest way to see if there’s a problem anywhere in your stack. If error spikes are bigger than traffic spikes, you know something has gone wonky. We usually use New Relic for the backend, but as all the backend code lives in Lambda functions we’ve opted to use CloudWatch.
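The "error spikes bigger than traffic spikes" rule of thumb can be written down as a comparison of error rates. The sketch below is only an illustration of that heuristic; the function names and the 1.5x tolerance are assumptions, not something Bugsnag or CloudWatch provides in this form.

```python
def error_rate(errors: int, requests: int) -> float:
    """Errors per request; zero traffic is treated as a zero error rate."""
    return errors / requests if requests else 0.0


def errors_outpacing_traffic(
    baseline: tuple[int, int],
    current: tuple[int, int],
    tolerance: float = 1.5,
) -> bool:
    """Return True when the error rate grew more than `tolerance` times.

    Each argument is an (errors, requests) pair for one time window.
    If errors merely scale with traffic, the rate stays flat and this is False.
    """
    return error_rate(*current) > error_rate(*baseline) * tolerance


if __name__ == "__main__":
    quiet = (10, 1_000)
    # Ten times the traffic and ten times the errors: just a busy day.
    print(errors_outpacing_traffic(quiet, (100, 10_000)))
    # Twice the traffic but ten times the errors: worth a look.
    print(errors_outpacing_traffic(quiet, (100, 2_000)))
```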

Yeah absolutely. Every commit and branch pushed to Stash is deployed to a testing environment. For the static assets and frontend JavaScript, this uses Bamboo, and creates a new folder for every branch and build number. For the Lambda functions, those were updated to each environment using serverless dash.

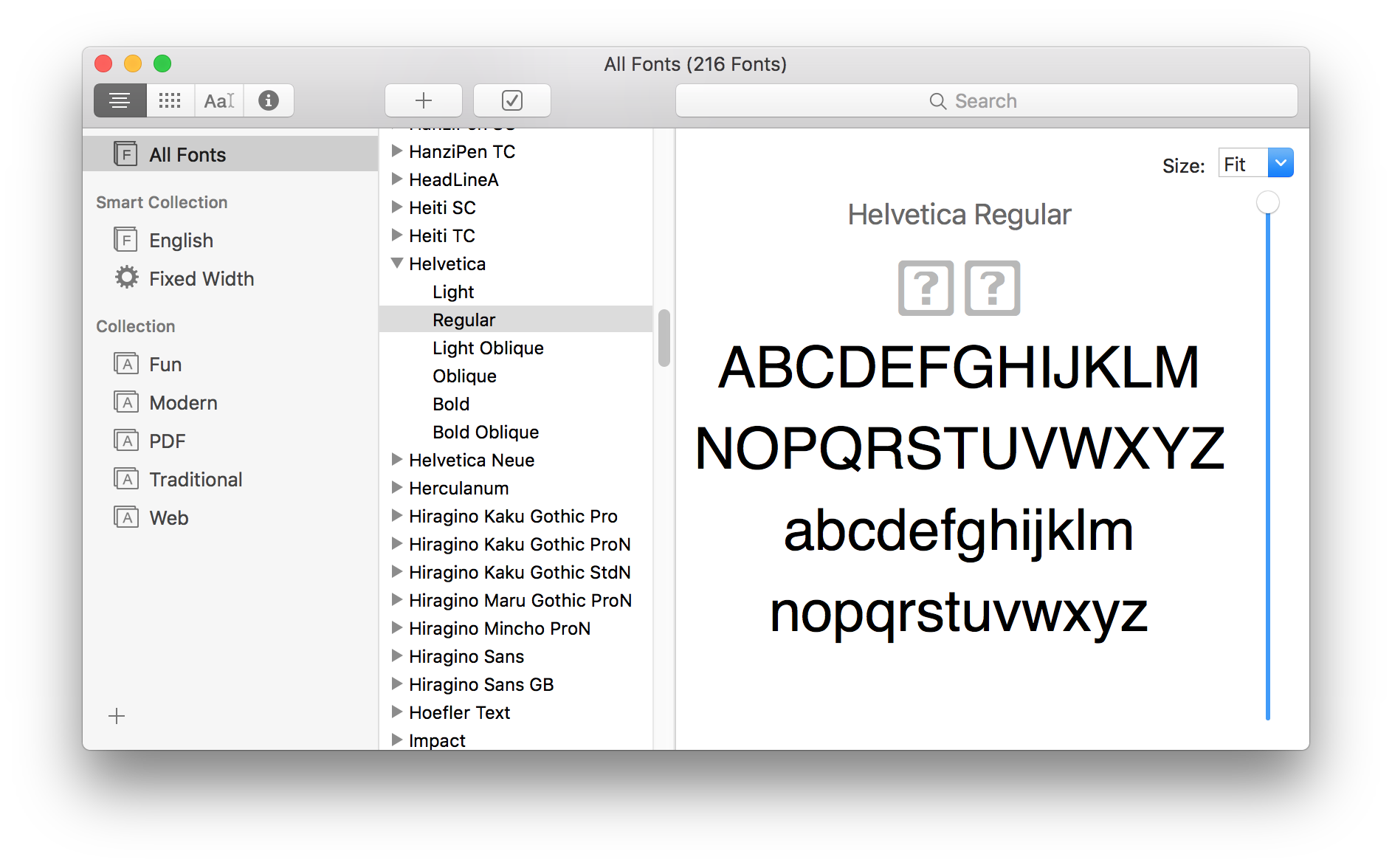

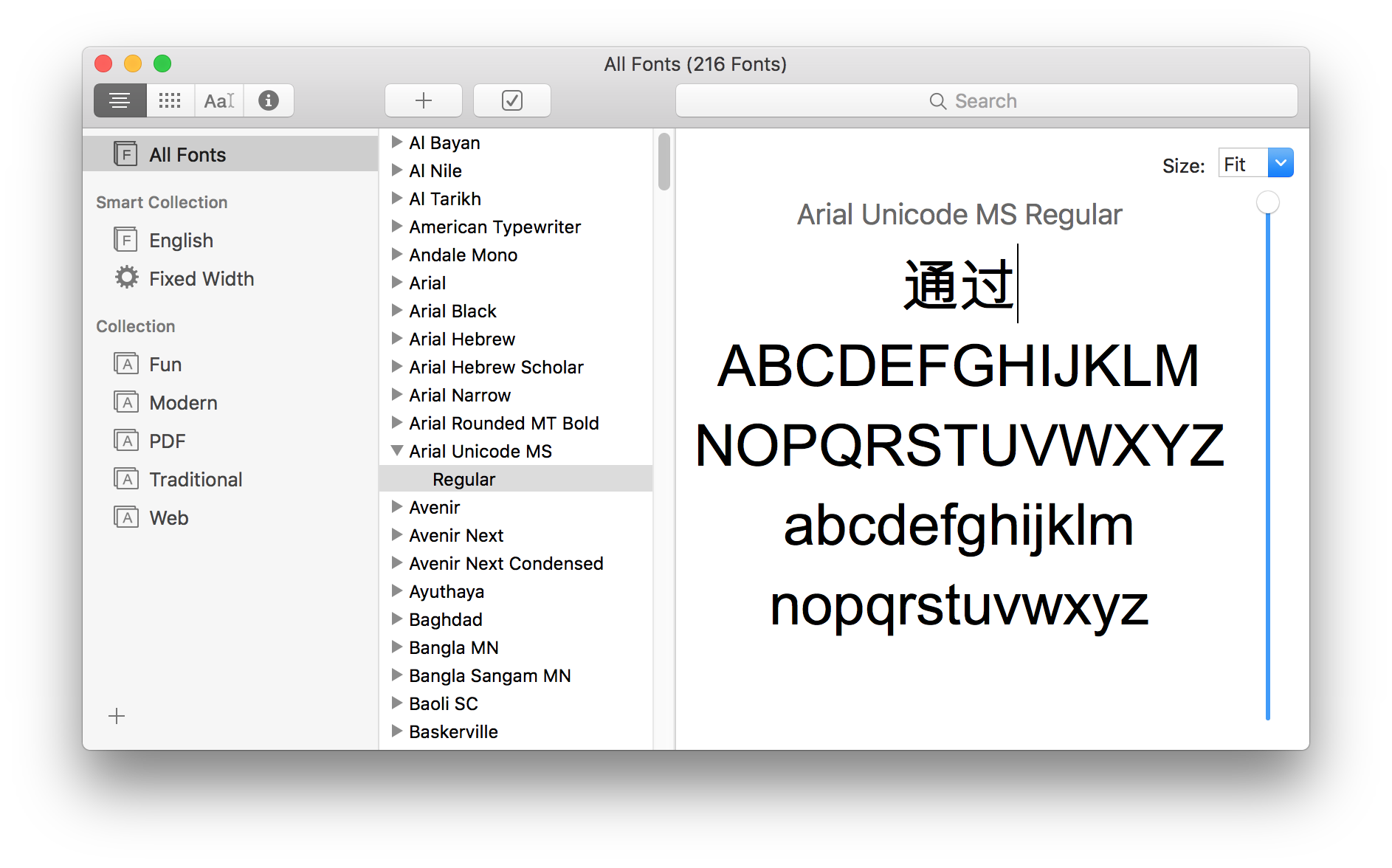

The server Lambda runs on lacks any fonts with Japanese and Chinese characters, which is to be expected, but wasn’t something we’d planned for during development. When an operating system renders text in a particular font and that font doesn’t contain a particular glyph, it will fall back to the system-installed multilingual Unicode font. This happens on the web automatically, but inside ImageMagick on a bare server it won’t. This means we had to ship a large Unicode font in our endpoint. We reduced the performance impact of this by only routing non-Latin names to the Unicode endpoint.

Helvetica, for example, doesn’t include any Asian Glyphs


But Arial does
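The routing of non-Latin names to the Unicode endpoint could be decided with a check like the one below. This is a sketch under assumptions: the endpoint paths are hypothetical, and it assumes the smaller font covers ASCII plus accented Latin letters, which the interview does not state.

```python
import unicodedata


def needs_unicode_font(name: str) -> bool:
    """Return True if any character falls outside ASCII and the Latin script."""
    for ch in unicodedata.normalize("NFC", name):
        if ord(ch) < 128:
            continue  # plain ASCII letters, digits, and punctuation
        # Unicode character names for Latin letters begin with "LATIN".
        # Everything else (CJK, Cyrillic, emoji, ...) is routed conservatively.
        if not unicodedata.name(ch, "").startswith("LATIN"):
            return True
    return False


def pick_endpoint(name: str) -> str:
    """Choose the image endpoint; both paths are hypothetical."""
    return "/image/unicode" if needs_unicode_font(name) else "/image/latin"


if __name__ == "__main__":
    for sample in ("José Müller", "田中", "Анна"):
        print(sample, pick_endpoint(sample))
```

Erring towards the Unicode endpoint costs some speed for a few names but avoids rendering missing-glyph boxes on someone's album cover.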


There are some issues with multiple branches and the early Serverless (formerly JAWS) framework. Say we’re adding an endpoint in one branch and a different endpoint in another branch: they can’t both be deployed to the same environment stage. This is something we worked around, and we’ll be looking to contribute some helpful tools back to Serverless to deal with this.

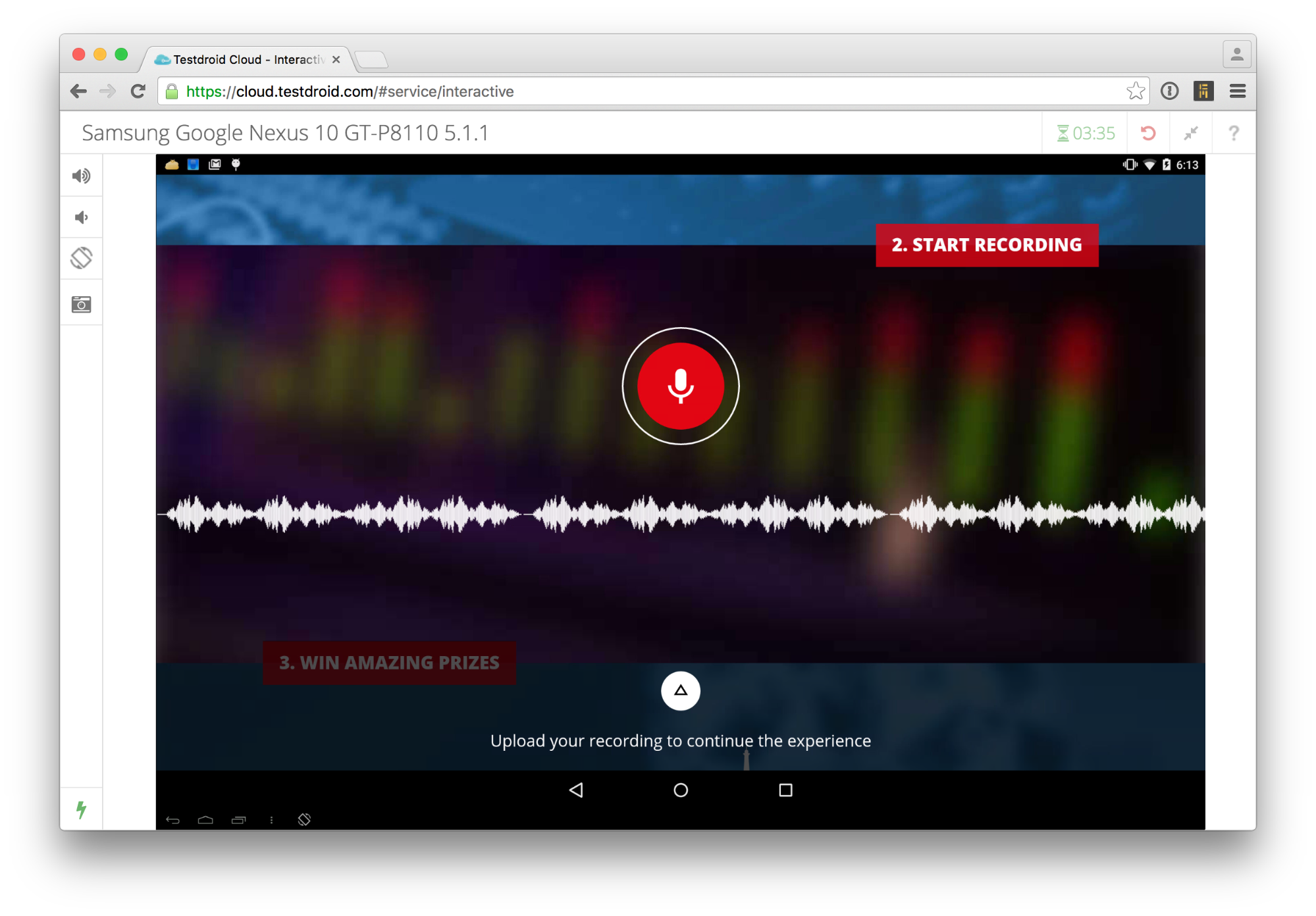

There are a couple of testing tools I’d highly recommend. Cameras and microphones behave very differently in emulators - sometimes they’re not even simulated at all! This means we had to use real devices for our testing. We used a Vanamco Device Lab along with five devices that we consider to be a good spread. We use this alongside Ghostlab which allows us to have the same page open on all devices and keep them in sync. It includes a web inspector to tweak CSS and run debugging JS. Then to increase Android coverage we used testdroid. This is a brilliant service that allows you to use real devices remotely. Open the camera app and you can see inside a data centre. I’ve been eagerly awaiting a testdroid engineer to poke his head into a rack, but have so far been disappointed!

Testdroid using a Google Nexus 10


Wrapping Up

Thanks again to James Hall for the inside view on CI, mobile testing, and Unicode workarounds for all-Lambda backends. To learn more about the Serverless Application Framework, check out their site or their gitter.im chatroom.

Disclosure: I have no relationship to Parallax Agency Ltd., but they build cool projects and this interview covers just one of them.

Keep up with future posts via RSS. If you have suggestions, questions, or comments, feel free to email me at ryan@serverlesscode.com.